Bots have a knack for retaining knowledge and improving the more they are used. They have built-in natural language processing (NLP) capabilities and are trained using machine learning techniques and knowledge collections. Just as humans evolve through learning and understanding, so do bots.

A glance at NLP and conversation design

Say something to a bot, and the bot breaks your utterance down into words and phrases to understand what you mean, just as humans detect your intentions from the words you use to express them.

You interact with bots to get things done: actions you want them to perform, tasks you want them to complete. Actions are described by verbs, as in "fetch something". "Fetch" is the action verb; "something" is the object, which the bot also understands. Together, they represent the task. Put simply, the bot scans the utterance for an action verb, an object, and a modifier that describes how or what, pointing to what the user's goal is.

In natural language processing, the user's goal is called an "intent", and that "something" is called an "entity". An entity modifies an intent; it could be a name, place, thing, location, time, and so on. For example:

Utterance (goal) -> Fetch weather report

Intent -> Fetch

Entities -> weather, report

Other examples include "Create new ticket", "Cancel my tickets", and "Search product". These are simple cases; human expression is far more complex and varied.
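To make the intent/entity split concrete, here is a minimal Python sketch, assuming hypothetical hard-coded vocabularies (`ACTION_VERBS`, `KNOWN_ENTITIES`, and `parse_utterance` are illustrative names, not any platform's API). It treats the first recognized action verb as the intent and every known object word as an entity, which is exactly the simplistic model described above and nothing more.

```python
# Hypothetical vocabularies: a real bot would load these from its
# language model and entity definitions rather than hard-code them.
ACTION_VERBS = {"fetch", "create", "cancel", "search"}
KNOWN_ENTITIES = {"weather", "report", "ticket", "tickets", "product"}


def parse_utterance(utterance: str) -> dict:
    """Split an utterance into an action-verb intent and known entities."""
    tokens = [t.strip(".,!?").lower() for t in utterance.split()]
    # The first recognized action verb is treated as the intent.
    intent = next((t for t in tokens if t in ACTION_VERBS), None)
    # Every token found in the entity vocabulary is returned as an entity.
    entities = [t for t in tokens if t in KNOWN_ENTITIES]
    return {"intent": intent, "entities": entities}


print(parse_utterance("Fetch weather report"))
# {'intent': 'fetch', 'entities': ['weather', 'report']}
```

Because the vocabularies are fixed, anything phrased differently ("get me the forecast") falls through; the techniques in the following sections exist to close that gap.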

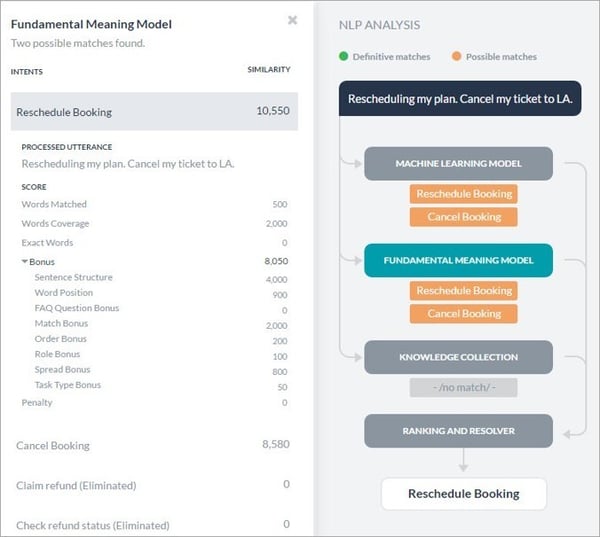

Semantic Intelligence (Fundamental Meaning)

Bots are equipped with semantic intelligence: they are programmed with computational linguistics (parts of speech, sentence structure, and so on) to understand the fundamental meaning of words based on their position, conjugation, capitalization, plurality, and other factors. Bots draw on a language corpus and built-in dictionaries to analyze utterances and recognize user intents.
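A rough illustration of how a built-in dictionary lets a bot see past capitalization, conjugation, and plurality. The tiny `LEMMAS` table and the `normalize` function are hypothetical; a production engine would rely on a full morphological dictionary rather than a hand-written mapping.

```python
# Hypothetical mini-dictionary mapping inflected forms to their base form
# (lemma). Real engines use full morphological dictionaries.
LEMMAS = {
    "fetched": "fetch", "fetches": "fetch", "fetching": "fetch",
    "cancelled": "cancel", "canceled": "cancel", "cancels": "cancel",
    "tickets": "ticket", "reports": "report",
}


def normalize(utterance: str) -> list[str]:
    """Lowercase each token and reduce it to its dictionary base form."""
    tokens = [t.strip(".,!?").lower() for t in utterance.split()]
    # Unknown words pass through unchanged.
    return [LEMMAS.get(t, t) for t in tokens]


print(normalize("Cancelled my Tickets"))
# ['cancel', 'my', 'ticket']
```

After normalization, "Cancelled my Tickets" and "cancel my ticket" look identical to the intent matcher, so neither form needs its own training data.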

Machine Learning

Machine learning complements this: the bot is trained with synonyms and patterns of words, phrases, slang, and sentences so that it learns alternate ways of expressing the same meaning in similar contexts. These alternate expressions are mapped to the utterances (intents) they stand for, so the bot knows they mean the same thing. Machine learning models continually expand the bot's language model and its intent and entity recognition. Many other, more advanced techniques exist as well.
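The sketch below shows the idea of mapping alternate expressions to a single intent. It is deliberately not machine learning: the phrases in `TRAINING_PHRASES` are hypothetical, and Jaccard token overlap stands in for a trained model, which would generalize to phrasings it has never seen.

```python
# Hypothetical training data: each intent lists alternate ways users might
# phrase it. Jaccard token overlap is a simple stand-in for a trained model.
TRAINING_PHRASES = {
    "fetch_weather": ["fetch weather report", "what is the weather",
                      "how is the weather today", "weather forecast please"],
    "create_ticket": ["create new ticket", "open a ticket",
                      "log an issue", "raise a support request"],
}


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of tokens the two sets have in common (0.0 to 1.0)."""
    return len(a & b) / len(a | b) if a | b else 0.0


def match_intent(utterance: str) -> tuple[str, float]:
    """Return the intent whose closest training phrase overlaps most."""
    tokens = set(utterance.lower().split())
    best_intent, best_score = "", 0.0
    for intent, phrases in TRAINING_PHRASES.items():
        for phrase in phrases:
            score = jaccard(tokens, set(phrase.split()))
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent, best_score


print(match_intent("raise a ticket"))
# ('create_ticket', 0.5)
```

"raise a ticket" appears nowhere in the data, yet it lands on `create_ticket` because it overlaps with "open a ticket"; adding more alternate phrasings widens what the bot recognizes.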

Knowledge Collection

Knowledge graphs enable you to organize information in a way that makes it easy for bots to crawl, search and extract the information the user is looking for. You create structured ontologies and then apply the same machine learning techniques to improve intent recognition and response generation.
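As a hedged illustration of crawling a structured ontology, here is a toy knowledge graph. The `OntologyNode` structure, its terms and FAQ entries, and the depth-first `search` are all assumptions made for this example; real knowledge-graph engines also score matches rather than simply testing for keyword overlap.

```python
from dataclasses import dataclass, field


@dataclass
class OntologyNode:
    """A concept in a hypothetical knowledge-graph ontology."""
    name: str
    terms: set[str]                                     # keywords and synonyms
    faqs: dict[str, str] = field(default_factory=dict)  # question -> answer
    children: list["OntologyNode"] = field(default_factory=list)


def search(node: OntologyNode, query: str,
           path: tuple[str, ...] = ()) -> list[tuple[str, str]]:
    """Depth-first crawl collecting answers from nodes whose terms appear in the query."""
    words = set(query.lower().split())
    path = path + (node.name,)
    hits = []
    if node.terms & words:
        hits.extend((" > ".join(path), answer) for answer in node.faqs.values())
    for child in node.children:
        hits.extend(search(child, query, path))
    return hits


bank = OntologyNode("bank", {"bank"}, children=[
    OntologyNode("cards", {"card", "cards"}, children=[
        OntologyNode("lost card", {"lost", "stolen"},
                     {"How do I report a lost card?": "Call support to block the card."}),
    ]),
    OntologyNode("loans", {"loan", "loans"},
                 {"What is the loan rate?": "Rates depend on the loan type."}),
])

print(search(bank, "I lost my card"))
# [('bank > cards > lost card', 'Call support to block the card.')]
```

The path returned with each answer reflects where the concept sits in the ontology, which is what makes the information easy to organize and extend.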

As user utterances get more complex, bots become more interactive.

A combination of the above techniques is used to score an utterance and arrive at the correct intent. When the meaning is incomplete, the bot keeps engaging the user until it understands the full utterance, so it can recognize the intent, extract the entities, and complete the task.
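One plausible way to combine several techniques into a single decision is sketched below. The engine names, the rule of keeping each intent's highest score, and the `CONFIDENT` and `AMBIGUOUS_GAP` thresholds are hypothetical. The point is the behavior: act on a clear winner, and ask a clarifying question when the top score is weak or two intents are nearly tied.

```python
# Hypothetical thresholds; real platforms tune these per bot.
CONFIDENT = 0.7       # top score needed to act without asking
AMBIGUOUS_GAP = 0.1   # top two intents closer than this count as a tie


def resolve_intent(engine_scores: dict[str, dict[str, float]]) -> str:
    """Merge per-engine intent scores and decide whether to act or ask."""
    combined: dict[str, float] = {}
    for scores in engine_scores.values():
        for intent, score in scores.items():
            # Keep the strongest evidence any engine found for this intent.
            combined[intent] = max(combined.get(intent, 0.0), score)
    ranked = sorted(combined.items(), key=lambda kv: kv[1], reverse=True)
    if not ranked or ranked[0][1] < CONFIDENT:
        return "ask: Sorry, could you rephrase that?"
    if len(ranked) > 1 and ranked[0][1] - ranked[1][1] < AMBIGUOUS_GAP:
        return f"ask: Did you mean {ranked[0][0]} or {ranked[1][0]}?"
    return f"run: {ranked[0][0]}"


print(resolve_intent({
    "semantic": {"fetch_weather": 0.82, "create_ticket": 0.10},
    "ml": {"fetch_weather": 0.75},
    "knowledge_graph": {"create_ticket": 0.05},
}))  # run: fetch_weather
print(resolve_intent({"ml": {"cancel_ticket": 0.80, "create_ticket": 0.75}}))
# ask: Did you mean cancel_ticket or create_ticket?
```

Taking the maximum is only one possible merge rule; a weighted average across engines is an equally reasonable assumption.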

Designing the conversation

A conversation is made up of many elements (greetings, small talk, questions, clarifications, confirmations, messages, prompts, responses, and so on) that together form the interaction, with the final goal of completing a task.

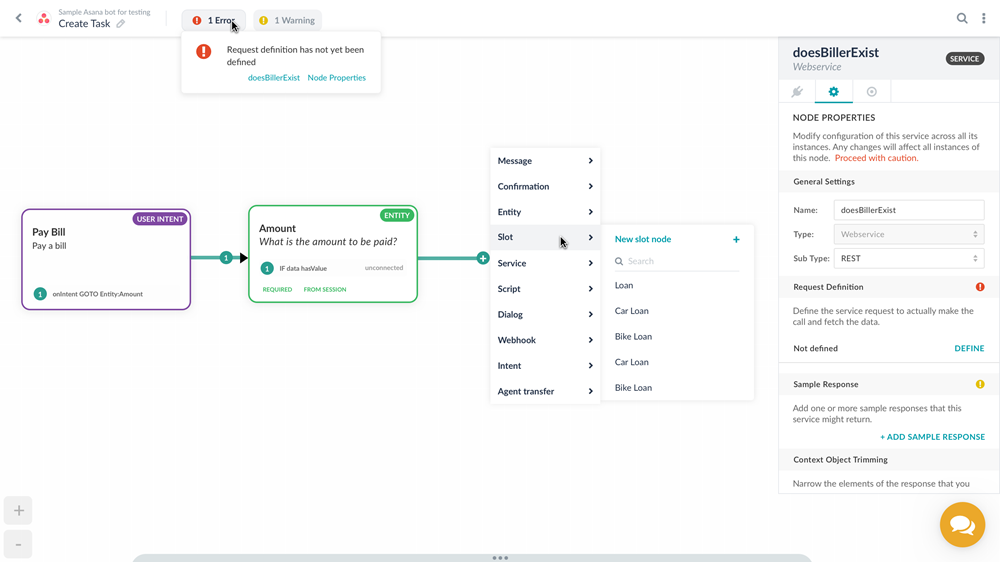

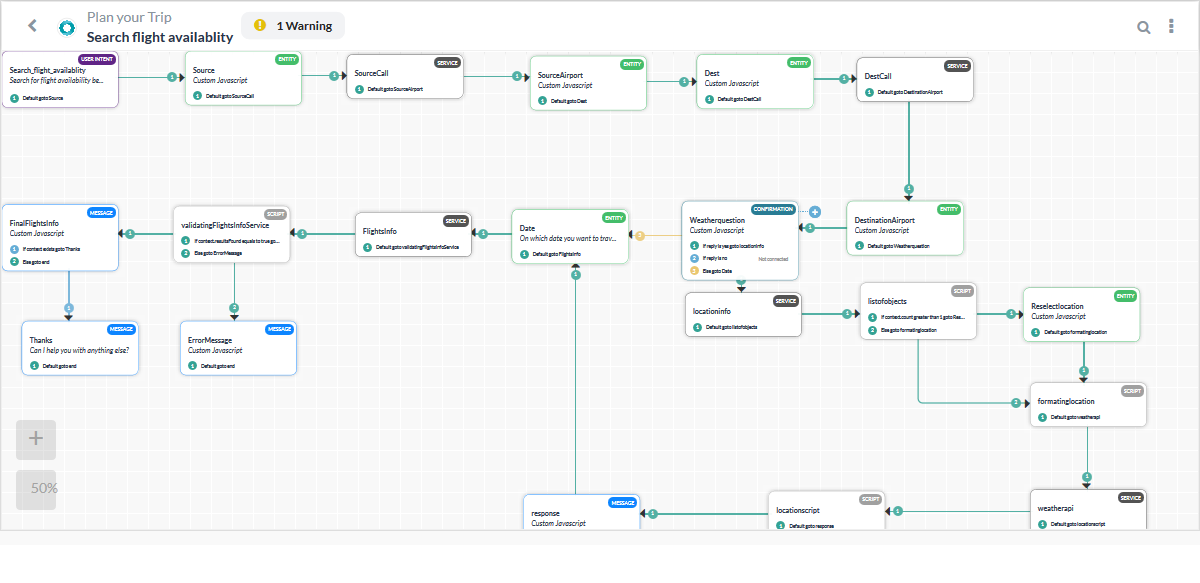

If you graphically represent a conversation between the user and a bot, the design would look like a tree, with a root node and several branches, based on the conversation paths and progression of the dialog.

Nodes are linked together, and the conditions defined in each node control the flow of the conversation. You can define simple "if-else" conditions or write rule-based condition expressions. Common node types include:

User Intent Node: The root holds the primary intent, identified from the user's utterance, which branches out into other nodes based on responses and preconditions. There can also be sub-intents or multiple intents, each with its own branches.

Entity Node: Prompts the user for input. Sample entity types include name, location, amount, and due date: data that must be extracted from the user to complete a task. A series of entity nodes collects the inputs needed to complete a transaction.

Bot Response Node: Delivers a message from the bot to the user. Message nodes commonly follow an API call, web service call, or webhook event and present the results as a formatted response.

Script Node: Lets you write JavaScript code that defines a step for the bot to take, for example, manipulating user input parameters before executing an API call, displaying custom error messages to the user, or making decisions based on complex business rules.

Service Node: Lets you call an API or a set of APIs, making REST or SOAP requests to third-party web services.

Confirmation Node: Prompts the user for a "yes" or "no" answer. It is useful for verification or for letting users accept or decline a step in the process, such as confirming before an API is executed.

WebHook Node: Used for server-side validation, executing business logic, or making backend server API calls. To use this node, you need to install an SDK Tool Kit.

Agent Transfer Node: Used to transfer the conversation from the bot to a human agent.
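Several of these node types can be approximated with a small interpreter. This is a minimal sketch under stated assumptions: the node classes, `run_dialog`, and the scripted user replies are hypothetical; `fake_weather_api` returns canned data in place of a real REST or SOAP call; the script node logic is written in Python rather than JavaScript; and webhook and agent transfer nodes are left out. Nodes run in a straight line here, whereas real dialog builders branch between them using transitions.

```python
from dataclasses import dataclass
from typing import Callable

Context = dict[str, str]


@dataclass
class EntityNode:
    key: str     # context slot to fill
    prompt: str  # question shown to the user


@dataclass
class ScriptNode:
    run: Callable[[Context], None]  # custom logic that edits the context


@dataclass
class ConfirmationNode:
    prompt: str  # may reference context slots, e.g. "{city}"


@dataclass
class ServiceNode:
    call: Callable[[Context], Context]  # stands in for a REST/SOAP request


@dataclass
class MessageNode:
    template: str  # bot response formatted from the context


def run_dialog(nodes: list[object], user_replies: list[str]) -> list[str]:
    """Execute nodes in order, feeding scripted replies; return the bot's messages."""
    ctx: Context = {}
    replies = iter(user_replies)
    transcript: list[str] = []
    for node in nodes:
        if isinstance(node, EntityNode):
            transcript.append(node.prompt)
            ctx[node.key] = next(replies)
        elif isinstance(node, ScriptNode):
            node.run(ctx)
        elif isinstance(node, ConfirmationNode):
            transcript.append(node.prompt.format(**ctx))
            if next(replies).strip().lower() not in {"yes", "y"}:
                transcript.append("Okay, cancelled.")
                return transcript
        elif isinstance(node, ServiceNode):
            ctx.update(node.call(ctx))
        elif isinstance(node, MessageNode):
            transcript.append(node.template.format(**ctx))
    return transcript


def fake_weather_api(ctx: Context) -> Context:
    # Placeholder for a real web-service call: always returns canned data.
    return {"forecast": "sunny"}


weather_task = [
    EntityNode("city", "Which city?"),
    EntityNode("date", "For which date?"),
    ScriptNode(lambda ctx: ctx.update(city=ctx["city"].strip().title())),
    ConfirmationNode("Get the weather for {city} on {date}?"),
    ServiceNode(fake_weather_api),
    MessageNode("The forecast for {city} on {date} is {forecast}."),
]

for line in run_dialog(weather_task, ["  new york", "Friday", "yes"]):
    print(line)
# Which city?
# For which date?
# Get the weather for New York on Friday?
# The forecast for New York on Friday is sunny.
```

Answering "no" at the confirmation node stops the task before the service node runs, which is the safeguard the confirmation node is meant to provide.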

Component Transitions

The nodes in a task flow are connected by transitions. Each transition depends on conditions, evaluated against business-defined rules, that determine the next step in the flow. You can define if-then-else conditions using a set of predefined operators and specify fallback conditions.
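Transitions with predefined operators and a fallback can be modeled as an ordered list of if-then-else checks. The operator names, the tuple layout of each transition, and the node names in the example are hypothetical; the first matching condition wins, and the fallback applies when none match.

```python
import operator
from typing import Any

# Hypothetical set of predefined comparison operators.
OPERATORS = {
    "equals": operator.eq,
    "not_equals": operator.ne,
    "greater_than": operator.gt,
    "less_than": operator.lt,
    "contains": operator.contains,  # contains(a, b) means "b in a"
}

# Each transition: (context field, operator name, value, target node).
Transition = tuple[str, str, Any, str]


def next_node(context: dict[str, Any], transitions: list[Transition],
              fallback: str) -> str:
    """Evaluate transitions in order (if / elif ...) and fall back when none match."""
    for field_name, op_name, value, target in transitions:
        if field_name in context and OPERATORS[op_name](context[field_name], value):
            return target
    return fallback


transfer_rules: list[Transition] = [
    ("amount", "greater_than", 1000, "manager_approval"),
    ("amount", "greater_than", 0, "confirm_transfer"),
]

print(next_node({"amount": 5000}, transfer_rules, "ask_amount"))  # manager_approval
print(next_node({"amount": 250}, transfer_rules, "ask_amount"))   # confirm_transfer
print(next_node({}, transfer_rules, "ask_amount"))                # ask_amount
```

Rule order matters: the stricter condition is listed first so that large amounts never fall through to the general case.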

The dialog builder must give developers control over conversational flows by allowing them to define intent and entity nodes and make conversation optimization a continuous process.

Conversational Platform Advantages

Global definition of intents and entities: Intents and entities can be defined at a global level, so they can be leveraged by developers across dialog tasks.

Component reusability: Developers can start from previously developed chatbot components, or reuse and modify components such as authentication profiles and nodes across various dialogs.

Support for intents and sub-intents: Dialog builders must be equipped with intent and sub-intent recognition capabilities. While every dialog task starts with a root intent, developers must be able to build complex flows with other associated intents (or sub-intent nodes), allowing the bot to "take a turn" mid-conversation and still return to execute the original request.

Pause and resume functionality: During dialog flow execution, if a user sends a message that does not relate to the current dialog flow, the platform must be able to handle it as a separate intent, putting the current intent on hold while the new intent is executed. Once the new intent completes, the user must be returned to the original dialog with its context carried over.
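Pause and resume maps naturally onto a stack of dialog frames. In this hypothetical sketch (`DialogFrame` and `DialogManager` are illustrative names, not a platform API), starting a new intent pushes a frame on top of the current one, and completing it pops that frame so the paused dialog resumes with its context intact.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DialogFrame:
    intent: str
    context: dict[str, str] = field(default_factory=dict)  # entities so far


class DialogManager:
    """Keeps interrupted dialogs on a stack so they can be resumed later."""

    def __init__(self) -> None:
        self.stack: list[DialogFrame] = []

    def current(self) -> Optional[DialogFrame]:
        return self.stack[-1] if self.stack else None

    def start(self, intent: str) -> str:
        """Begin a new intent, pausing whatever dialog is in progress."""
        paused = self.current()
        self.stack.append(DialogFrame(intent))
        if paused is None:
            return f"Starting '{intent}'."
        return f"Pausing '{paused.intent}' to handle '{intent}'."

    def complete(self) -> str:
        """Finish the current intent and resume the one beneath it, if any."""
        finished = self.stack.pop()
        resumed = self.current()
        if resumed is None:
            return f"Finished '{finished.intent}'."
        return (f"Finished '{finished.intent}'. "
                f"Resuming '{resumed.intent}' with {resumed.context}.")


dm = DialogManager()
print(dm.start("book_flight"))
dm.current().context["destination"] = "Paris"  # entity collected so far
print(dm.start("check_weather"))                # user changes topic mid-dialog
print(dm.complete())
# Starting 'book_flight'.
# Pausing 'book_flight' to handle 'check_weather'.
# Finished 'check_weather'. Resuming 'book_flight' with {'destination': 'Paris'}.
```

A stack also handles nested interruptions (an interruption of an interruption) without extra bookkeeping.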

Advantages of a multi-pronged NLP approach for enterprises

Faster bot training: The fundamental meaning (semantic understanding) approach means less data is required to optimize natural language processing.

Easy oversight of training data: Because machine learning uses massive amounts of data, you can't easily look at a long list of training sentences and see what you've missed. By contrast, with fundamental meaning, it's easy to look over a list of synonyms or a small set of idiomatic sentences and see what has been overlooked.

Automatic handling of conjugated words: An NLP engine with a built-in dictionary understands how the conjugated forms of a word relate, so it doesn't have to treat every verb tense as a different word (unique data).

Fewer false positives: Administrators have less need to trawl through success logs looking for rare wrong interpretations.

Higher user satisfaction and adoption: A bot that understands better and processes intent and requests correctly is going to be more useful to employees and customers. It will also be more satisfying to use and reduce frustration from failed inputs.

Ability to extend the platform: The platform provides logic exits where companies can plug in their own machine learning models or custom task implementations wherever necessary, including for abstract tasks.

What's in it for bot users

Fewer false positives: Just as this benefits the enterprise from a deployment perspective, it also benefits the user from a user experience perspective. The user gets a more consistently successful experience because, rather than acting on a wrong interpretation, the bot prompts the user for more information.

Simpler user interface: With a multi-pronged approach, you don't have to change your speech patterns to use a bot correctly. Rather than tailoring your input to the bot, the bot adapts to your input, which not only makes it simpler to use but also makes it consistent across channels and devices. This is a marked departure from GUI systems.

Understand context: The bot can understand, remember and leverage contextual information in the conversational dialog with the user to provide a personalized bot experience.
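Context carry-over can be sketched as a memory that fills in whatever a follow-up turn leaves out. `ConversationMemory` is a hypothetical class, and its merge rule (new entities override remembered ones) is an assumption; a real bot would also decide when remembered context should expire. It shows how "What about tomorrow?" can reuse the earlier intent and city.

```python
from typing import Optional


class ConversationMemory:
    """Remembers the last intent and entities so follow-up turns can omit them."""

    def __init__(self) -> None:
        self.last_intent: Optional[str] = None
        self.entities: dict[str, str] = {}

    def interpret(self, intent: Optional[str],
                  entities: dict[str, str]) -> tuple[Optional[str], dict[str, str]]:
        """Fill in whatever this turn leaves out from earlier turns."""
        resolved = intent or self.last_intent
        merged = {**self.entities, **entities}  # new values override old ones
        self.last_intent, self.entities = resolved, merged
        return resolved, merged


memory = ConversationMemory()
# "What's the weather in Paris today?"
print(memory.interpret("fetch_weather", {"city": "Paris", "date": "today"}))
# "What about tomorrow?" carries no intent and no city of its own.
print(memory.interpret(None, {"date": "tomorrow"}))
# ('fetch_weather', {'city': 'Paris', 'date': 'tomorrow'})
```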