If we look at communication between humans and computers from a historical perspective, we’re not far removed from the age of hieroglyphics and cave drawings. Graphical user interfaces (GUIs) have been, and still are, the dominant user interface, especially in customer-facing channels like websites and mobile apps. A GUI exchange in a typical ecommerce setting goes something like this: the customer is presented with icons and visual indicators, interprets that visual language, and decides where to take the interaction next. Rinse and repeat.

But what we know now is that people love to chat. They really love it. As chat becomes the dominant method of communication across the globe, several things are holding the GUI back:

A) It doesn’t use human language, so people have to learn to speak “computerese”: recognizing the meanings of icons and entering obscure sequences of clicks and keystrokes.

B) It’s easy for companies to mine data from graphical interfaces, but it’s getting harder for them to make heads or tails of what actually drives engagement, which hurts their ability to give customers optimized, personalized service.

C) You can only communicate with the computer through specific media. You can’t email it, text it, or call it.

Now, more and more companies are turning to natural language processing, or NLP, as a solution to these fundamental issues with the traditional interface. As Bruce Wilcox, four-time Loebner Prize winner and chatbot expert, puts it: “People desperately want to bond with things, whether it is other people, pets, cars or software.” The best way to bond with something is to talk to it! But there also needs to be mutual understanding. The need for two-way conversation between humans and computers is driving NLP technology, but there’s still much people don’t understand about it, so we’re here to break it down piece by piece.

What is Natural Language Processing?

NLP lets people talk to computers in ordinary human language instead of programming languages. It breaks down communication barriers by allowing anyone, with or without computing knowledge, to talk to bots, systems, apps, or any other kind of software.

The problem is that human speech is far broader and less precise than a programming language, so just because you can talk to a system doesn’t mean it will always understand you.

Here’s why. Let’s say I want to buy some flooring for my home, so I ask a retail chatbot to “Find me the nearest store that sells vinyl.” In this case, “vinyl” could mean many things: vinyl records, vinyl wrap, vinyl fencing. The bot may guess correctly and show me stores that sell vinyl flooring, but chances are it would first have to ask whether that was what I meant, and that approach sums up the beauty of NLP: when the system isn’t sure what you mean, it can simply turn around and ask you.
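
To make the vinyl example concrete, here is a minimal Python sketch of that ask-when-unsure behavior. The sense table, its cue words, and the `resolve` function are hypothetical illustrations, not Kore.ai’s engine: if the words in the request (plus any context from earlier in the conversation) point to exactly one sense, the bot uses it; otherwise it asks a clarifying question.

```python
import re

# Hypothetical sense inventory: ambiguous term -> {sense: cue words for that sense}.
SENSES = {
    "vinyl": {
        "vinyl flooring": {"floor", "flooring", "tile", "plank"},
        "vinyl records": {"record", "records", "album", "turntable"},
        "vinyl wrap": {"wrap", "car", "decal"},
        "vinyl fencing": {"fence", "fencing", "yard"},
    },
}


def resolve(utterance, term, context=()):
    """Return the single matching sense, or a clarifying question if unsure."""
    senses = SENSES.get(term)
    if not senses:
        return term  # not a known ambiguous term, so use it as-is
    words = set(re.findall(r"[a-z]+", utterance.lower())) | set(context)
    # Keep the senses whose cue words appear in the request or earlier context.
    matches = [sense for sense, cues in senses.items() if cues & words]
    if len(matches) == 1:
        return matches[0]
    options = matches or list(senses)
    if len(options) == 1:
        return f"Did you mean {options[0]}?"
    return f"When you say '{term}', do you mean {', '.join(options[:-1])}, or {options[-1]}?"


print(resolve("Find me the nearest store that sells vinyl", "vinyl"))
print(resolve("Find me the nearest store that sells vinyl", "vinyl", context={"flooring"}))
```

With no context, the request above produces a question listing all four kinds of vinyl; passing `context={"flooring"}` (say, because flooring came up earlier in the chat) lets it settle on “vinyl flooring” without asking. A production system would rank senses with a trained model rather than fixed cue words.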

The role of Machine Learning and Artificial Intelligence in NLP

Machine learning, by definition, is a type of artificial intelligence that gives computers the ability to learn without being explicitly programmed. It’s similar to data mining in that both search data for patterns. Machine learning can help NLP-powered systems adjust their actions according to the historical context and patterns they pick up in conversation. For instance, if I ask a banking bot to “pay my electric bill” around the 25th of each month for three straight months, the bot can start anticipating the request and automate the task.
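
The electric-bill example can be illustrated with a deliberately simple sketch. A real assistant would learn such patterns with a trained model; the hypothetical `monthly_day` function below swaps in a hand-written rule instead, flagging a request that recurred in each of the last three consecutive months, with all three requests falling within an assumed three-day window of the same day of the month.

```python
from datetime import date
from typing import List, Optional


def monthly_day(request_dates: List[date], months: int = 3, window_days: int = 3) -> Optional[int]:
    """Return the typical day of month if the request recurred in each of the
    last `months` consecutive months, all within `window_days` of each other."""
    if len(request_dates) < months:
        return None
    recent = sorted(request_dates)[-months:]
    # Each request must land in the calendar month right after the previous one.
    for prev, cur in zip(recent, recent[1:]):
        if (cur.year - prev.year) * 12 + (cur.month - prev.month) != 1:
            return None
    days = [d.day for d in recent]
    # The days of the month must cluster tightly to count as a pattern.
    if max(days) - min(days) > window_days:
        return None
    return round(sum(days) / len(days))


history = [date(2024, 1, 25), date(2024, 2, 24), date(2024, 3, 26)]
day = monthly_day(history)
if day is not None:
    print(f"Offer to schedule the electric bill payment on day {day} of each month.")
```

Month-end wrap-around (a request on the 30th one month and the 1st the next) is not handled here; the point is only to show how a repeated request at a regular interval can become something the bot proposes on its own.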

NLP technology is human-like in the sense that more conversation can lead to better comprehension. A GUI won’t understand me better the more I use it; it will only know whether I’m reacting to it the same way or differently. If I don’t give the intended response, a developer has to change the visual indicator altogether. You can start to see how this might cause problems for companies developing customer-facing apps and websites. Conversational assistants, by contrast, get real-time feedback on the user experience in users’ own words.

Here are some other techniques natural language processing engines use to better comprehend human speech.

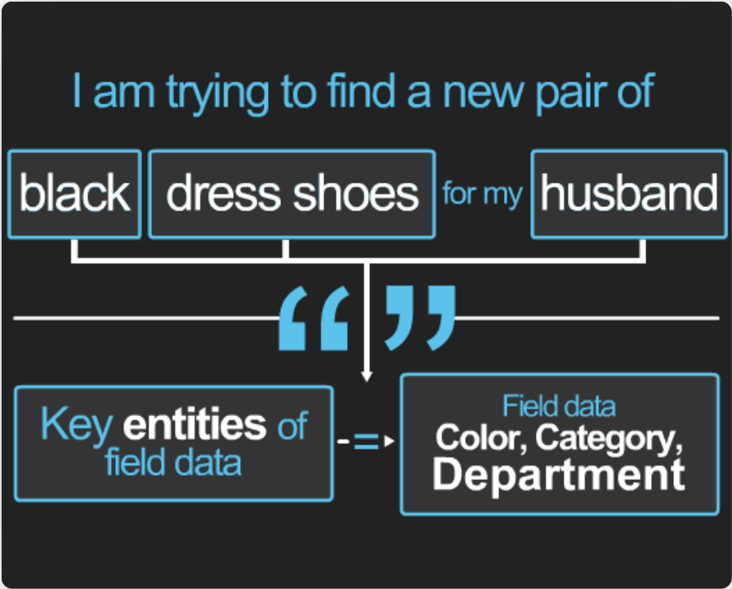

- Input parsing - Unlike keyword searches, which match individual words and ignore word order, NLP systems actually parse your input, because they can understand the subject-verb-object structure of a sentence. “I want to buy a diamond ring not mined in South Africa” isn’t something you could hand over to Amazon’s search and expect the proper results. But a commerce bot with NLP could search through the fine print of product entries and exclude rings mined in South Africa from the choices.

- Synonyms - Just like when you talk with a friend, you can use dozens of words interchangeably for the same verb, noun, or any other part of speech. Mapping synonyms onto the keywords that trigger each task is essential to more advanced human-to-computer communication.

- Interactivity - The conversational nature of NLP not only creates a more enjoyable user experience, but also helps the system clarify requests, prompt for missing data, summarize request results, and, in some cases, ask permission to proceed.
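
The input-parsing point can be sketched by contrasting a bag-of-words keyword search with a search that understands one negated constraint. The catalogue and the `not mined in <Place>` pattern are hypothetical, and the regular expression is only a stand-in for a real parser, which would recover the sentence’s structure rather than match a fixed phrase.

```python
import re

# Hypothetical catalogue; "origin" stands in for each entry's fine print.
PRODUCTS = [
    {"name": "Solitaire diamond ring", "origin": "South Africa"},
    {"name": "Halo diamond ring", "origin": "Canada"},
    {"name": "Three-stone diamond ring", "origin": "Botswana"},
]

# Hypothetical pattern for one negated constraint: "not mined in <Place>".
NOT_MINED_IN = re.compile(r"not mined in ([A-Z][a-z]*(?: [A-Z][a-z]*)*)")


def words(text):
    return set(re.findall(r"[a-z]+", text.lower()))


def keyword_search(query, products):
    """Rank products by shared words; word order and negation are ignored."""
    q = words(query)
    scored = [(len(q & words(p["name"] + " " + p["origin"])), p["name"]) for p in products]
    return [name for score, name in sorted(scored, key=lambda s: -s[0]) if score > 0]


def parsed_search(query, products):
    """Treat 'not mined in <Place>' as an exclusion instead of a match."""
    match = NOT_MINED_IN.search(query)
    excluded = match.group(1) if match else None
    return [p["name"] for p in products if p["origin"] != excluded]


query = "I want to buy a diamond ring not mined in South Africa"
print(keyword_search(query, PRODUCTS))
print(parsed_search(query, PRODUCTS))
```

For the diamond-ring query, the keyword search ranks the South African ring first, because “South” and “Africa” count as matching words, while the constraint-aware search drops it from the results.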
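
For synonyms, a common starting point is a table that maps many surface words onto one canonical intent. The intents and word lists below are hypothetical examples; inverting the table gives a direct word-to-intent lookup, and in this sketch the first trigger word found in the utterance wins.

```python
import re

# Hypothetical synonym table: canonical intent -> words that can trigger it.
INTENT_SYNONYMS = {
    "pay_bill": {"pay", "settle"},
    "check_balance": {"balance", "funds"},
    "transfer": {"transfer", "send", "move", "wire"},
}

# Invert the table so each trigger word points straight at its intent.
TRIGGERS = {word: intent for intent, words in INTENT_SYNONYMS.items() for word in words}


def detect_intent(utterance):
    """Return the intent of the first trigger word in the utterance, or None."""
    for token in re.findall(r"[a-z]+", utterance.lower()):
        if token in TRIGGERS:
            return TRIGGERS[token]
    return None


print(detect_intent("Please settle my electric bill"))  # pay_bill
```

A fuller engine would also weigh context and word senses, since the same word can trigger different tasks in different sentences.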
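
The interactivity point, prompting for missing data and then summarizing and asking permission, is often implemented as slot filling. This is a minimal sketch under assumed names (`BillPayment`, `PROMPTS`, and `next_turn` are hypothetical): the bot asks for the first empty slot and, once every slot is filled, reads the request back and asks before acting.

```python
from dataclasses import dataclass, fields
from typing import Optional

# Hypothetical follow-up question for each slot the task needs.
PROMPTS = {
    "payee": "Which bill would you like to pay?",
    "amount": "How much should I pay?",
    "date": "When should the payment go out?",
}


@dataclass
class BillPayment:
    payee: Optional[str] = None
    amount: Optional[float] = None
    date: Optional[str] = None


def next_turn(task: BillPayment) -> str:
    """Prompt for the first missing slot, or summarize and ask permission."""
    for slot in fields(task):
        if getattr(task, slot.name) is None:
            return PROMPTS[slot.name]
    return f"I'll pay ${task.amount:.2f} to {task.payee} on {task.date}. Shall I go ahead?"


task = BillPayment(payee="City Electric")
print(next_turn(task))  # How much should I pay?
task.amount, task.date = 82.5, "June 25"
print(next_turn(task))  # I'll pay $82.50 to City Electric on June 25. Shall I go ahead?
```

Keeping the questions in a table separate from the task makes it easy to reword prompts without touching the dialog logic.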

The study and use of natural language processing can get much more granular, and if you’re looking for more information on Kore.ai’s own NLP engine, we’ve got it. Global consumers are pushing this technology into the mainstream, and retailers, banks, healthcare organizations, insurance companies, and the like will be responsible for facilitating the conversation between humans and systems. After all, what may now seem like “small talk” is the future of big business.