For most enterprise technology endeavors, innovation involves a cost-benefit analysis. Companies routinely must choose between launching quickly and launching correctly. If speed is the primary driver, quality suffers; if quality is the primary driver, speed suffers.

The same is true of chatbot development, specifically its natural language processing (NLP) component. Believe it or not, chatbots don’t come right out of the gate able to understand human speech; like a person preparing to converse in the real world, they need to be trained. Machine learning (ML) is the most common way developers NL-enable a bot to talk to people, systems, and things. But ML requires a substantial amount of time, work, and, most importantly, data to create a bot that can accurately interpret and respond to predetermined inputs.

When it comes to natural language training, the essential question is: can machine learning alone deliver both the quality of NL intelligence a chatbot needs and the speed to market an enterprise needs? The answer, at least for now, is complicated. Under an ML model, development cycles for complex chatbots can quickly grow longer, and in many cases time to deployment becomes a business issue. The greater the accuracy (that is, quality) the chatbot demands, the longer it takes to train. That’s the conventional wisdom for most enterprises hoping to build intelligent chatbots, but it doesn’t have to be.


Analyzing Machine Learning and Chatbots

To understand why ML presents a game of give-and-take for chatbot training, it’s important to examine the role it plays in how a bot interprets a user’s input. A common misconception is that ML results in a bot understanding language word for word. In fact, ML doesn’t interpret what the words themselves mean when processing what the user says. Instead, it uses what the developer has trained it with (patterns, data, algorithms, and statistical modeling) to find a match for an intended goal. In the simplest terms, it is like a person memorizing the phrase “Where is the train station?” in another language without understanding the language itself. Sure, the phrase serves a specific purpose for a specific task, but it offers no wiggle room or ability to vary the phrase in any way.
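To make the “memorized phrase” idea concrete, here is a minimal sketch of match-to-closest-example classification. It uses a bag-of-words nearest-neighbor matcher as a simple stand-in for ML; real ML models are far more sophisticated, and the training set and intent names (`find_station`, `get_weather`, `book_table`) are hypothetical. The point it illustrates is that the matcher only compares word overlap with stored examples; it has no notion of what “railway” or “depot” mean.

```python
import math
from collections import Counter

# Hypothetical training set: each utterance is labeled with an intent.
# A production ML model would need far more examples per intent.
TRAINING_EXAMPLES = [
    ("where is the train station", "find_station"),
    ("what is the weather forecast", "get_weather"),
    ("book a table for two", "book_table"),
]


def vectorize(text: str) -> Counter:
    """Bag of words: counts tokens, ignoring word order and meaning."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values()) * sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def classify(utterance: str) -> tuple:
    """Return (intent, score) for the most similar training example."""
    vec = vectorize(utterance)
    scored = [(intent, cosine(vec, vectorize(example)))
              for example, intent in TRAINING_EXAMPLES]
    return max(scored, key=lambda pair: pair[1])


print(classify("Where is the train station"))
print(classify("How do I reach the railway depot"))
```

The first call matches its training example exactly. The paraphrase scores only about 0.17, and it lands on `find_station` solely because both sentences contain “the,” which is exactly the kind of educated guess the article describes.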

Learning this way, the ML way, requires huge amounts of data and teaching to reach an acceptable degree of accuracy. With ML, it typically takes around 1,000 examples to reach a level of accuracy that produces positive user experiences.

When insufficient data exists during the pre-deployment stage, which is usually the case because there are no users yet to supply it, bot developers must fall back on writing custom rules to identify the intent of a message. The simplest rule might be something like “if a sentence contains the word ‘forecast,’ the user is asking about the weather.” That sounds like a simple enough fix, but as conversations grow longer and more complex, accuracy drops quickly, the bot becomes prone to false positives, and the user experience suffers. That’s not good if you’ve spent time and resources on a bot that’s a pain to use!
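A rough sketch of the “forecast” rule shows how quickly keyword rules misfire. The function name and intent label are hypothetical; the rule itself is the one described above.

```python
import string
from typing import Optional


def rule_based_intent(utterance: str) -> Optional[str]:
    """Naive keyword rule: any mention of 'forecast' is treated as a weather question."""
    words = [w.strip(string.punctuation) for w in utterance.lower().split()]
    if "forecast" in words:
        return "get_weather"
    return None  # no rule fired


print(rule_based_intent("What's the forecast for tomorrow?"))     # intended weather match
print(rule_based_intent("Send me the Q3 sales forecast, please"))  # false positive: also "weather"
print(rule_based_intent("Will it rain this weekend?"))            # a real weather question, missed
```

The same rule produces both a false positive (a sales report) and a miss (a weather question that never says “forecast”), and every new rule added to patch one case risks breaking another.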

Using Fundamental Meaning to Aid Your Chatbot’s NLP

Fundamental Meaning (FM) is an approach to NLP that’s all about understanding the words themselves. Each user utterance is broken down word by word, as if the chatbot were a student diagramming a sentence on the chalkboard. During this process, it looks for two things: intent (what the user is asking it to do) and entities (the data needed to complete the task).

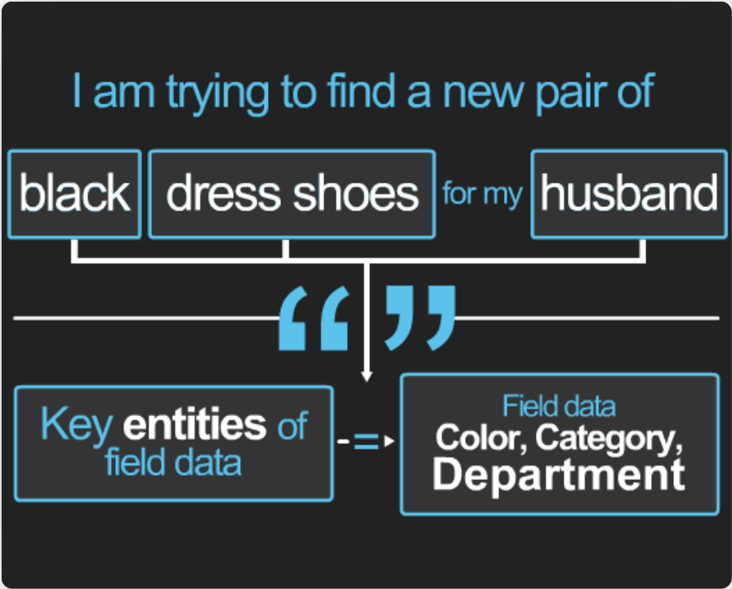

For example, if a user types this request to a shopping chatbot:

“I am trying to find a pair of black dress shoes for my husband”

The chatbot would break the utterance down to its essentials (verbs and nouns) to determine that the intent is “find shoes.” Now that the chatbot knows what it’s supposed to be doing, it can look for entities. In this scenario, “black” (color), “dress” (category), and “husband” (men’s department) give the bot an idea of where to start.
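The breakdown above can be sketched as a two-step pass: find a known verb and noun to form the intent, then collect entities. This is a toy lexicon lookup, not Kore.ai’s parser (which the article does not describe); the vocabularies `ACTIONS`, `OBJECTS`, and `ENTITIES` are hypothetical stand-ins for what a developer might define for a shopping bot.

```python
import string

# Hypothetical vocabulary a developer might define for a shopping bot.
ACTIONS = {"find"}   # verbs the bot can act on
OBJECTS = {"shoes"}  # nouns the bot has tasks for
ENTITIES = {         # word -> (entity type, value)
    "black": ("color", "black"),
    "dress": ("category", "dress"),
    "husband": ("department", "men"),
}


def tokenize(utterance: str) -> list:
    """Lowercase and strip surrounding punctuation from each word."""
    return [w.strip(string.punctuation) for w in utterance.lower().split()]


def parse(utterance: str) -> tuple:
    """Pick out a known verb and noun as the intent, then collect entities."""
    tokens = tokenize(utterance)
    verb = next((t for t in tokens if t in ACTIONS), None)
    noun = next((t for t in tokens if t in OBJECTS), None)
    intent = f"{verb} {noun}" if verb and noun else None
    entities = dict(ENTITIES[t] for t in tokens if t in ENTITIES)
    return intent, entities


print(parse("I am trying to find a pair of black dress shoes for my husband"))
```

Words the bot has no use for (“trying,” “pair,” “my”) are simply skipped, and the result is the intent “find shoes” plus color, category, and department entities.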

Because a chatbot using FM has a basic understanding of language, it can recognize common synonyms of a command and determine its intended action. As developers think of new synonyms for words like “find” (e.g., search, look for, get, display, show) and “shoes” (e.g., wingtips, square toes), they can add them to build the chatbot’s vocabulary and increase its intelligence. A chatbot could then process an utterance like “Display options for black wingtips” the same way as our example sentence.
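A minimal sketch of synonym resolution, assuming a hypothetical table that maps each canonical term to its variants (including multi-word ones like “look for”). Both the example sentence and the wingtips request resolve to the same intent.

```python
import string

# Hypothetical synonym table: canonical term -> phrases that mean the same thing.
SYNONYMS = {
    "find": ["find", "search", "look for", "get", "display", "show"],
    "shoes": ["shoes", "wingtips", "square toes"],
}


def contains_phrase(tokens: list, phrase: str) -> bool:
    """True if the (possibly multi-word) phrase appears as consecutive tokens."""
    parts = phrase.split()
    n = len(parts)
    return any(tokens[i:i + n] == parts for i in range(len(tokens) - n + 1))


def resolve_intent(utterance: str) -> str:
    """Map whichever synonyms appear onto their canonical terms, in table order."""
    tokens = [w.strip(string.punctuation) for w in utterance.lower().split()]
    terms = [canonical for canonical, variants in SYNONYMS.items()
             if any(contains_phrase(tokens, v) for v in variants)]
    return " ".join(terms)


print(resolve_intent("I am trying to find a pair of black dress shoes for my husband"))
print(resolve_intent("Display options for black wingtips"))
```

Extending the bot’s vocabulary is then a one-line change: appending a new variant to the relevant list, with no new training sentences required.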

The Kore.ai Approach: Don’t Play Guessing Games With Your Users

The NLP engine for Kore.ai’s Bots Platform combines ML with fundamental meaning (FM), addressing most of the problems with an ML-only approach. With this multipronged model, bot accuracy improves, development cycles are slashed, and it becomes easier to spot the categories of input the bot fails to interpret.


ML-only chatbots are essentially optimistic. Their primary function is to match a user’s utterance to the closest piece of data they already know. In other words, they make an educated guess, and inevitably they will guess wrong and frustrate a user. In contrast, chatbots using FM err on the side of pessimism. If they can’t identify the intent or entities within a sentence, they ask follow-up questions to gain more information and clarification. Adding ML to FM results in a more well-rounded NLP engine, allowing developers to fill gaps in communication by resolving conflicts between idiomatic phrases.
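The difference between the two attitudes can be sketched as two reply policies. This is an illustration under stated assumptions, not Kore.ai’s implementation: the `REQUIRED_ENTITIES` task definition, the `confidence` score (imagined as coming from an ML matcher), and the 0.7 threshold are all hypothetical.

```python
from typing import Dict, List, Optional

# Hypothetical task definition: entities a "find shoes" task needs before it can run.
REQUIRED_ENTITIES = {"find shoes": ["category", "color", "size"]}


def optimistic_reply(intent: Optional[str], entities: Dict[str, str]) -> str:
    """ML-only style: always act on the best guess, even if wrong or incomplete."""
    return f"Running '{intent}' with {entities}"


def pessimistic_reply(intent: Optional[str], entities: Dict[str, str],
                      confidence: float, threshold: float = 0.7) -> str:
    """FM style: ask a follow-up question instead of guessing."""
    if intent is None or confidence < threshold:
        return "Sorry, I didn't catch that. What would you like to do?"
    missing: List[str] = [e for e in REQUIRED_ENTITIES.get(intent, []) if e not in entities]
    if missing:
        return f"Sure. What {missing[0]} are you looking for?"
    return f"Running '{intent}' with {entities}"


print(optimistic_reply("find shoes", {"category": "dress", "color": "black"}))
print(pessimistic_reply("find shoes", {"category": "dress", "color": "black"}, confidence=0.9))
```

Given the same partial request, the optimistic policy charges ahead without a shoe size, while the pessimistic one asks for it. Combining an ML confidence score with FM’s check for missing entities is one plausible way the two approaches could complement each other.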

Measuring the Benefits

A multipronged NLP model using both ML and FM offers advantages to both chatbot developers and users.

For developers, the following are some of the most notable business outcomes:

- Faster bot training. Rather than supplying hundreds or thousands of sentences to train the bot, as an ML-only approach requires, developers need to supply only a few synonyms and field data when using FM to create tasks for their bot. (Of course, the more synonyms, the better.)

- Simpler training data. With ML, every verb tense, singular or plural noun, adjective, and adverb is treated as a unique piece of data, which can add a ton of upfront work for developers training a bot. FM understands known language patterns and easily accounts for these variations, so there’s no need to explicitly train the bot for a simple change like “find ATM” vs. “find ATMs.”

- Maturing intelligence. Unlike ML, which has no concept of sentence structure, FM analyzes each sentence separately. As a result, chatbots can process more complex requests and even prioritize intents within an utterance. Adding ML to FM also lets developers increase a bot’s intelligence and improve the accuracy of input interpretation over time as they gather more data.

- Easier identification of missing elements. With ML, missing data elements are not easy to find. With FM, developers simply need to look through a list of synonyms and a small set of sentences.

- Fewer false positives. A combined ML and FM approach reduces the number of false positives, so developers spend less time trawling through logs to find inaccurate interpretations.
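The “find ATM” vs. “find ATMs” point from the list above can be illustrated with a toy normalizer that reduces words to base forms before matching. The `LEMMAS` table is hypothetical and deliberately tiny; a real engine would rely on a full morphological analyzer rather than a hand-written dictionary.

```python
import string

# Hypothetical lemma table; a real FM engine would use a morphological analyzer.
LEMMAS = {
    "atms": "atm",
    "finds": "find",
    "finding": "find",
    "found": "find",
}


def normalize(utterance: str) -> tuple:
    """Reduce each word to its base form so inflected variants compare equal."""
    tokens = [w.strip(string.punctuation) for w in utterance.lower().split()]
    return tuple(LEMMAS.get(t, t) for t in tokens)


# One canonical phrase now covers several surface variants.
KNOWN_PHRASES = {normalize("find ATM"): "find_atm"}

for text in ["find ATM", "find ATMs", "Finding ATMs"]:
    print(text, "->", KNOWN_PHRASES.get(normalize(text)))
```

All three variants map to the same task, whereas an ML-only bot would typically need each form represented in its training data.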

For users, fewer false positives translate into a superior user experience, and because chatbots can store user data, the bot actually gets to “know” them over time. Preferences, billing addresses, account numbers, birthdays: anything a bot can use to make tasks easier and faster gets stored. The more conversations a user has with a bot, the more it learns and the more useful it becomes.
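One simple way a bot could put stored details to work is to fill missing entities from a user profile. The sketch below assumes a hypothetical in-memory store keyed by user ID; a production bot would persist this data elsewhere, and the slot names are illustrative only.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical in-memory profile store keyed by user ID.
profiles: Dict[str, Dict[str, str]] = defaultdict(dict)


def fill_slots(user_id: str, required: List[str],
               provided: Dict[str, str]) -> Tuple[Dict[str, str], List[str]]:
    """Combine what the user just said with what the bot remembers about them."""
    profile = profiles[user_id]
    filled: Dict[str, str] = {}
    missing: List[str] = []
    for slot in required:
        if slot in provided:
            filled[slot] = provided[slot]  # what the user said now wins
        elif slot in profile:
            filled[slot] = profile[slot]   # fall back to a remembered value
        else:
            missing.append(slot)           # the bot will need to ask
    profile.update(provided)  # remember new details for future conversations
    return filled, missing


fill_slots("u1", ["color", "size"], {"color": "black", "size": "10"})  # first conversation
print(fill_slots("u1", ["color", "size"], {"color": "brown"}))        # size recalled
```

On the second request the user never mentions a size, yet the bot can proceed because it remembered “10” from the earlier conversation.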


Not One, But Both

A Fundamental Meaning approach to NLP helps organizations get chatbots off the ground faster and more easily, while ML enables developers to resolve idiomatic phrase conflicts and add utterances to the lexicon library. Together, they enable not only faster NLP development but also more thorough NLP processing. For enterprises, a chatbot strategy no longer has to mean choosing one or the other. Now it can be both.
