We are six weeks into 2025, and already a number of developments have taken the world by storm. In this article I build on my recent conversation with Carlos Campos about what the future holds.

AI Agents & Agentic Spectrums

Recently HuggingFace defined AI Agents as programs where LLM outputs control a workflow. I find this definition interesting because it moves away from the notion that being an AI Agent is a binary, yes-or-no concept.

Instead, agency exists on a continuous spectrum, depending on how much control or influence you grant the LLM-based application over your workflow.

Organisations should avoid AI Agents when tasks are simple and deterministic, and can be solved with predefined workflows.

Any effective AI-powered system will inevitably need to provide LLMs with some level of access and integration to the real world. (This is where tools come into play.) For example, this could include the ability to call a search tool for external information or interact with specific programs to complete a task.
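To make the idea of tools concrete, here is a minimal sketch of a single step in which the model's output decides which tool runs. Everything in it is an assumption for illustration: `fake_llm` is a stand-in for a real model call, and `search_tool` and `calculator_tool` are hypothetical tools, not part of any particular framework.

```python
from typing import Callable, Dict


# Hypothetical tools; real ones would call a search API or another program.
def search_tool(query: str) -> str:
    return f"[search results for: {query}]"


def calculator_tool(expression: str) -> str:
    # Toy arithmetic that only handles "a + b", to avoid using eval().
    left, right = expression.split("+")
    return str(float(left) + float(right))


TOOLS: Dict[str, Callable[[str], str]] = {
    "search": search_tool,
    "calculator": calculator_tool,
}


def fake_llm(user_request: str) -> str:
    """Stand-in for a model call (assumption). Returns 'tool_name: argument'."""
    if any(ch.isdigit() for ch in user_request):
        return f"calculator: {user_request}"
    return f"search: {user_request}"


def run_step(user_request: str) -> str:
    # The model output, not hard-coded logic, selects the tool to run.
    decision = fake_llm(user_request)
    tool_name, argument = decision.split(":", 1)
    tool = TOOLS.get(tool_name.strip())
    if tool is None:
        return "No suitable tool"
    return tool(argument.strip())


print(run_step("2 + 3"))
print(run_step("latest AI agent news"))
```

The more of these decisions you hand to the model (which tool, how many steps, when to stop), the further along the agentic spectrum the application sits.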

This integration has traditionally been done through APIs and code, but recent developments have focussed on using the GUI as the interface for AI Agents. The GUI becomes the point of integration for the AI Agent, just as it is the point of integration for humans.

Agentic Workflows

Agentic Workflows as a lower level of Agency...

There is broad agreement that modern knowledge work is broken, with various figures being cited. One report stated that workers spend 30% of their time searching for information.

Knowledge workers also face the challenge of answering complex questions that require synthesising information from various documents.

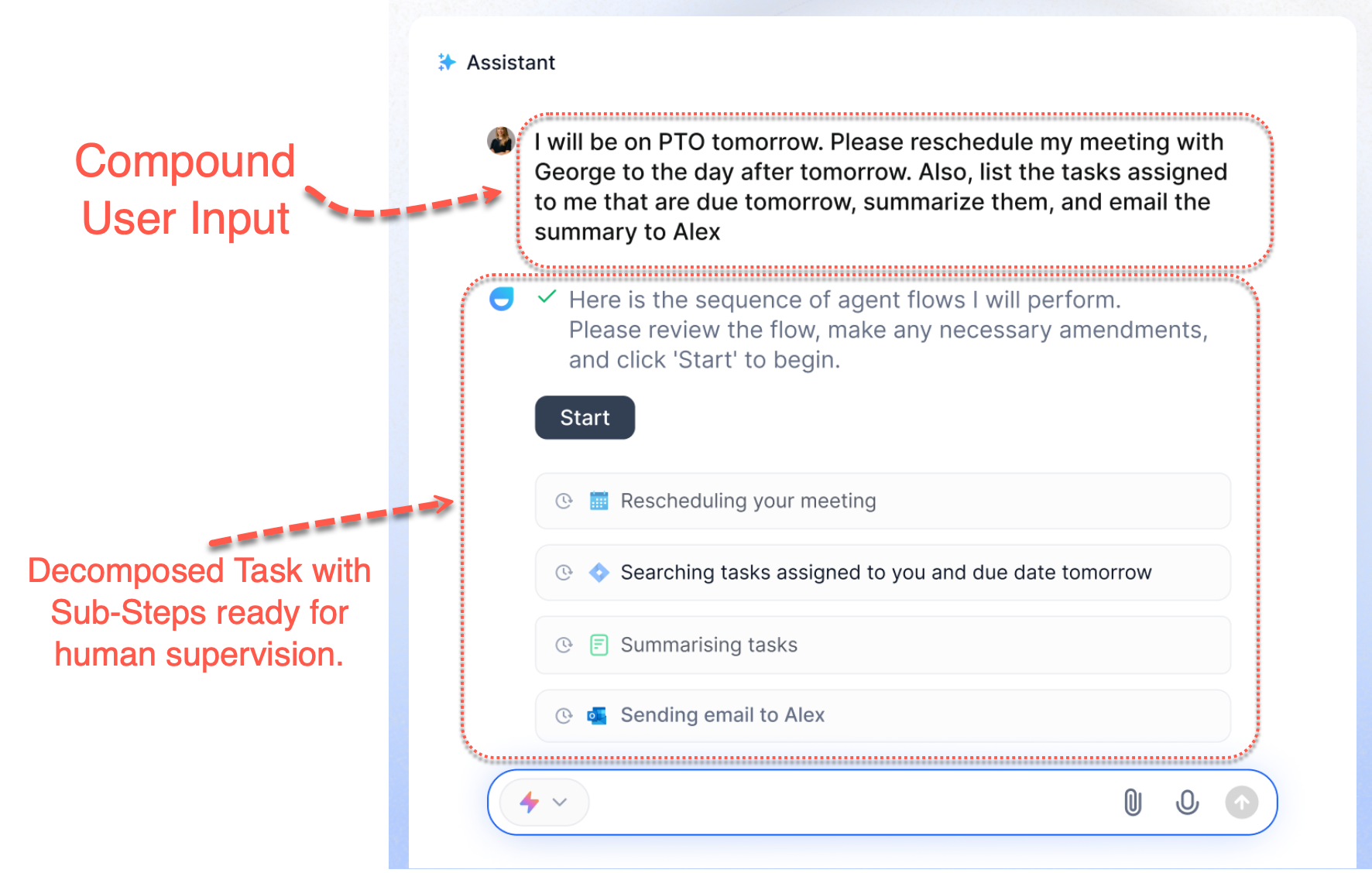

Agentic Workflows (as shown in the image above) allow for reasoning, decomposing complex tasks into simpler sub-tasks, and chaining these sub-tasks together in a sequence.

By executing this sequence, elements like observability, inspectability and discoverability are introduced.
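As a rough illustration of chaining sub-tasks in a sequence, the sketch below runs three hypothetical steps (`decompose`, `retrieve`, `synthesise`) and records every intermediate input and output in a trace. The step functions are placeholders I made up; in a real workflow each would call a model or a data source.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Workflow:
    # Ordered (name, function) pairs; each step receives the previous output.
    steps: List[Tuple[str, Callable[[str], str]]]
    # One (step name, input, output) record per executed step.
    trace: List[Tuple[str, str, str]] = field(default_factory=list)

    def run(self, task: str) -> str:
        data = task
        for name, step in self.steps:
            result = step(data)
            self.trace.append((name, data, result))
            data = result
        return data


# Placeholder steps (assumptions), standing in for model or retrieval calls.
def decompose(question: str) -> str:
    return question.strip().rstrip("?").lower()


def retrieve(query: str) -> str:
    return f"documents about '{query}'"


def synthesise(docs: str) -> str:
    return f"Answer based on {docs}"


workflow = Workflow(
    steps=[("decompose", decompose), ("retrieve", retrieve), ("synthesise", synthesise)]
)
answer = workflow.run("What is our leave policy?")
print(answer)
for name, step_input, step_output in workflow.trace:
    print(f"{name}: {step_input!r} -> {step_output!r}")
```

Because each step is explicit and logged, the path from question to answer can be inspected after the fact, which is where the observability and inspectability come from.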

The synthesis of data will become increasingly important. Agentic Workflows for knowledge workers are one such example, where work data and resources can be synthesised for the worker into one answer.

Language Model providers are moving away from offering only the model, and are extending into User Experience. Deep Research in ChatGPT is not a new model, but rather a new agentic capability within ChatGPT which conducts multi-step research on the internet for complex tasks. It accomplishes in tens of minutes what would take a human many hours.

This is also a good example of how disparate sources of data are synthesised to answer a user question.

Model Capability

Future AI models will prioritise specific capabilities, such as reasoning, planning and problem-solving, rather than serving as vast repositories of knowledge.

This shift will enable models to be more efficient, reducing computational demands while improving performance in specialised tasks.

Instead of memorising facts, these models will focus on interpreting data, drawing logical conclusions, and adapting to new information dynamically.

By training AI for targeted skills rather than sheer knowledge, developers can create more agile, domain-specific, and cost-effective solutions.

Reasoning

OpenAI introduced reasoning with the o1 model; however, the reasoning was performed under the hood. The user was given no insight into the reasoning, even though the user paid for it.

One of the factors behind the DeepSeek phenomenon is that DeepSeek Chat streams the reasoning the model works through in order to reach the final answer.

A key aspect of reasoning is that it is a form of internal chain-of-thought, where a complex question is decomposed into sub-steps which are followed in a sequential fashion. Hence reasoning has traits of AI Agents, where decomposition takes place and a chain of thought and action is formed.

Reasoning is a tool which can be used in various ways. For example, there is recent research from OpenAI where knowledge documents are converted into procedures which can be of help to customers or agents.

Reasoning provides insight into problem-solving, thought processes, and data synthesis to achieve a goal.

Small Language Models & Orchestration

Small Language Models (SLMs) will become more efficient, enabling edge devices and offline applications to leverage AI without heavy computational costs.

As enterprises seek cost-effective AI solutions, orchestration frameworks will allow seamless integration of multiple SLMs, optimising performance across tasks.

Future AI systems will dynamically switch between SLMs and larger models based on context, balancing speed, accuracy, and resource consumption.
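One way such switching could work is a simple router that estimates how demanding a prompt is and picks a model accordingly. The sketch below is only an assumption about what that might look like: `small_model` and `large_model` are placeholder functions, and the scoring heuristic and threshold are invented for illustration, not taken from any orchestration framework.

```python
def estimate_complexity(prompt: str) -> float:
    """Crude, made-up heuristic: long prompts and reasoning keywords score higher."""
    keywords = ("why", "plan", "compare", "prove", "analyse")
    score = min(len(prompt.split()) / 50, 1.0)
    # Substring matching is deliberately naive ("explanation" also matches "plan").
    score += 0.5 * sum(word in prompt.lower() for word in keywords)
    return min(score, 1.0)


# Placeholder models (assumptions), standing in for real SLM and LLM endpoints.
def small_model(prompt: str) -> str:
    return f"[small model] {prompt}"


def large_model(prompt: str) -> str:
    return f"[large model] {prompt}"


def route(prompt: str, threshold: float = 0.5) -> str:
    # Send demanding prompts to the larger model, everything else to the SLM.
    model = large_model if estimate_complexity(prompt) >= threshold else small_model
    return model(prompt)


print(route("Translate hello to French"))
print(route("Compare these two contracts and plan a migration"))
```

In practice the complexity estimate would more likely come from a classifier or from the small model's own confidence, but the trade-off is the same: the threshold balances speed and cost against accuracy.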

Open-source and fine-tuned SLMs will empower businesses to deploy domain-specific AI without relying on massive, centralised models. Advances in orchestration will drive AI interoperability, enabling modular architectures where multiple specialised models collaborate in real-time.

You can listen to our discussion here.