How can large language models (LLMs) be used to enhance the contact center agent experience and improve customer service? Automating customer conversations with empathetic responses can help businesses provide a more personalized experience that increases customer satisfaction and improves brand loyalty. It can also help businesses reduce agent workload, lower operational costs, and improve overall efficiency in the contact center.

Today we’re walking step by step through an example use case that shows how LLMs can enhance the contact center agent experience. We’ll provide a visual demonstration of how LLMs can automate conversations with customers, provide empathetic responses, and allow quick access to frequently asked questions in the contact center. This example illustrates how LLMs can help businesses streamline the customer service process and enhance the overall customer experience.

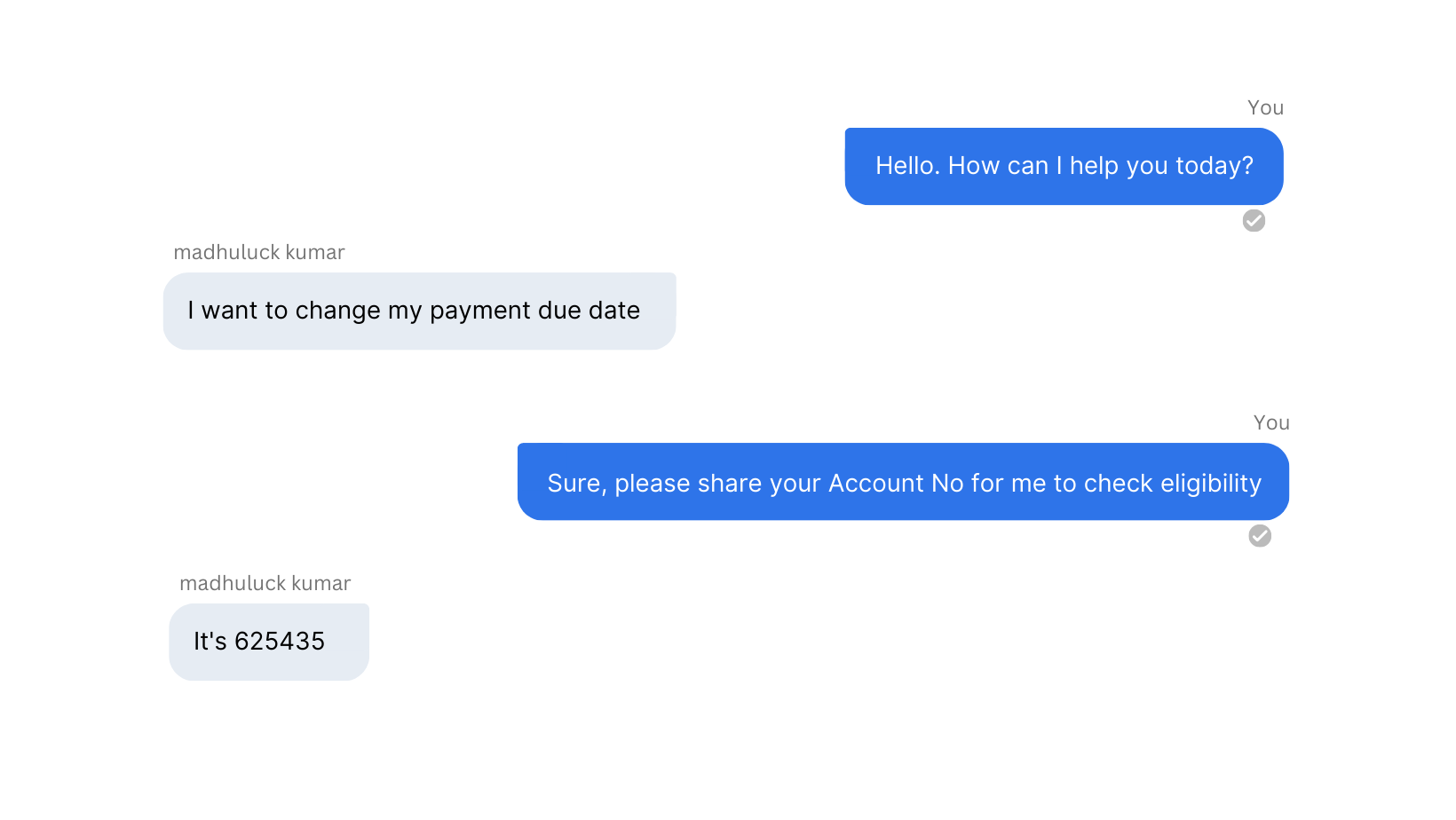

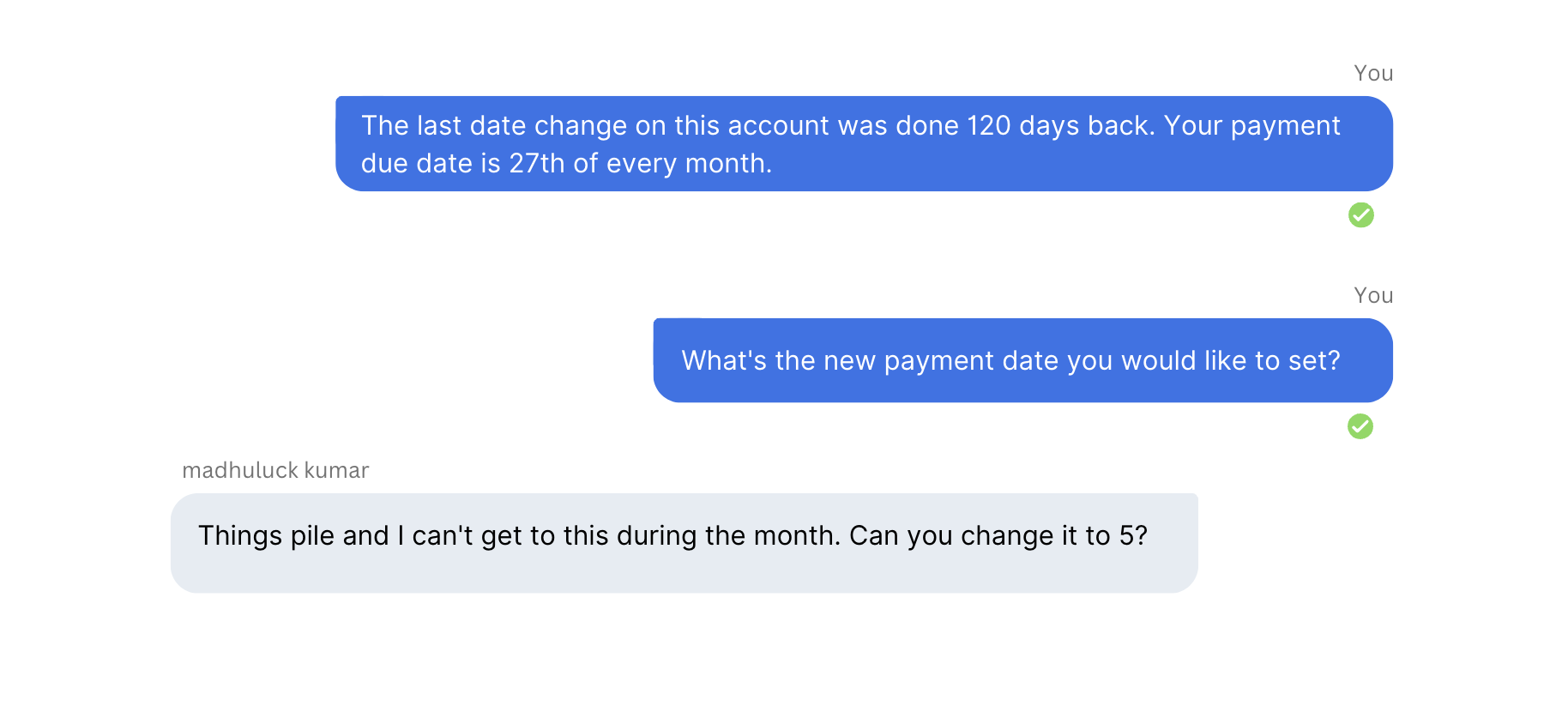

In this scenario, I play the role of a contact center agent handling a sample conversation with a customer who enters the queue with a question.

How LLMs Can Automate Conversations with Customers

Large language models can automate conversations with customers by generating welcome responses and pulling up dialog tasks that can be run side-by-side with the agent's desktop. This can streamline the entire flow from start to finish, making the process more efficient for both the agent and the customer.


Once the chat is initiated, the intelligent virtual assistant (IVA) will generate an appropriate welcome response to the customer. In this example, we see that the customer wants to change their payment due date.

The IVA automatically pulls up the matching dialog task and runs it alongside the agent. If the LLM and virtual assistant are set up properly, with a dialog task built for this request, the entire workflow can be automated from start to finish.
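As a rough illustration of that hand-off, the sketch below maps a customer utterance to a dialog task using simple keyword matching. The task names, keyword lists, and the `route_to_dialog_task` helper are hypothetical and are not part of the Kore.ai XO Platform; a production assistant would rely on an LLM or NLU model for intent detection rather than keywords.

```python
from typing import Optional

# Hypothetical dialog tasks and the keywords that trigger them (assumptions for illustration).
DIALOG_TASKS = {
    "change_payment_due_date": ["due date", "payment date"],
    "check_balance": ["balance", "how much do i owe"],
}


def route_to_dialog_task(utterance: str) -> Optional[str]:
    """Return the name of the first dialog task whose keyword appears in the utterance."""
    text = utterance.lower()
    for task, keywords in DIALOG_TASKS.items():
        if any(keyword in text for keyword in keywords):
            return task
    # No match: leave the conversation with the human agent.
    return None


print(route_to_dialog_task("I want to change my payment due date"))
```

Returning `None` when nothing matches keeps the agent in control of any request the assistant does not recognize.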


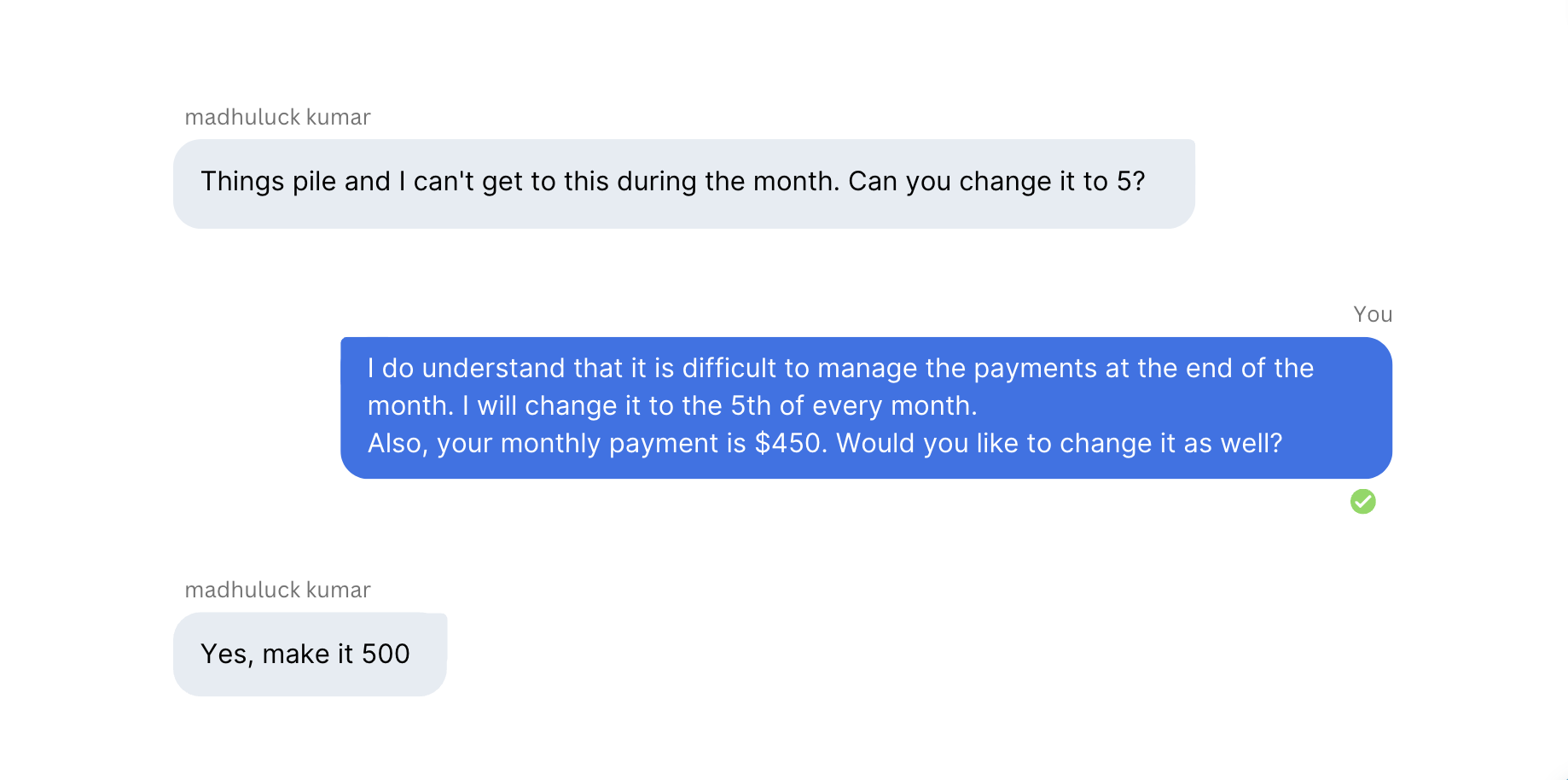

The customer responds: ‘Things pile up and I can't get to this during the month. Can you change it to five?’ This suggests the customer has a lot going on, so we want to provide an empathetic response.

Providing Responses with Empathy for the Customer

LLMs can also provide empathetic responses to customers, helping to elevate the conversational customer experience. By using LLMs to understand the customer’s needs and respond in a human-like way, the intelligent virtual assistant can make customers feel heard and valued.

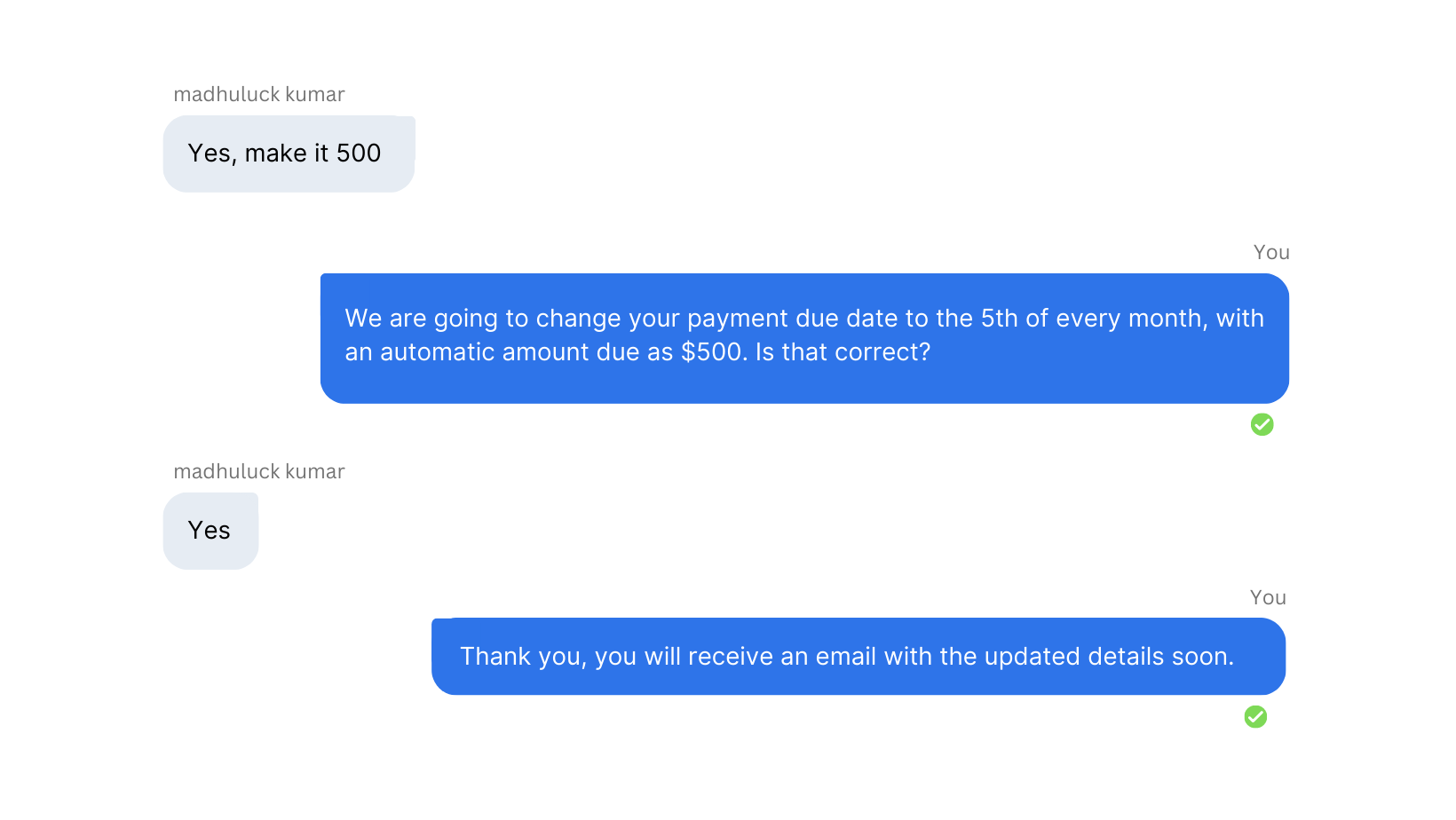

So we respond that ‘We understand that it’s difficult to manage these payments’. Showing that empathy lets us deliver the next level of conversational customer experience. The next step is to finish out this flow and finish helping the customer.
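One way to steer an LLM toward this kind of reply is to ask for the acknowledgement explicitly in the prompt. The sketch below only assembles such a prompt; the wording and the `build_empathy_prompt` helper are assumptions for illustration, not the prompt the platform uses, and the call to an actual model is left out.

```python
def build_empathy_prompt(customer_message: str, next_step: str) -> str:
    """Assemble a prompt asking an LLM to acknowledge the customer before moving on."""
    return (
        "You are a contact center assistant.\n"
        "First acknowledge the customer's situation in one empathetic sentence, "
        "then state the next step.\n"
        f"Customer message: {customer_message}\n"
        f"Next step: {next_step}\n"
    )


# Example using the customer's message from the conversation above.
prompt = build_empathy_prompt(
    "Things pile up and I can't get to this during the month. Can you change it to five?",
    "Confirm the new payment due date.",
)
print(prompt)
```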

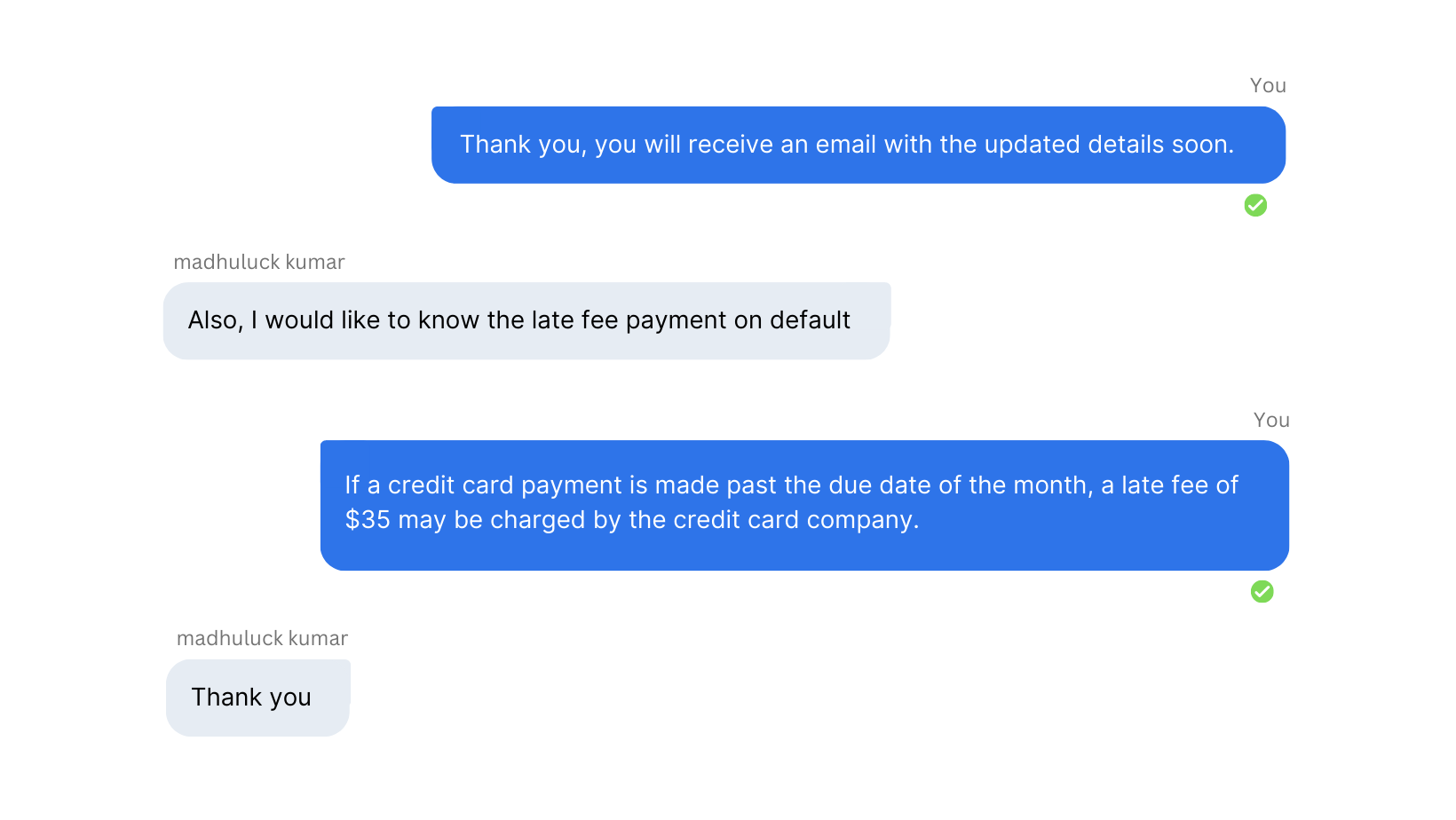

Using the Knowledge Base FAQ

Using the LLM, the intelligent virtual assistant can also answer additional questions. Because it is connected to the company's knowledge base, the IVA can efficiently find the right frequently asked question (FAQ) and return an accurate response to the customer.
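To make the lookup step concrete, here is a minimal sketch that picks the FAQ entry sharing the most words with the customer's question. The FAQ entries and helper names are hypothetical, and word overlap stands in for the LLM-based matching a real assistant would use.

```python
import re
from typing import Optional

# Hypothetical knowledge base entries (assumptions for illustration).
FAQS = {
    "How do I change my payment due date?": "You can change your due date once per billing cycle.",
    "How do I update my billing address?": "Go to Account Settings and select Billing Address.",
}


def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def find_faq_answer(question: str) -> Optional[str]:
    """Return the answer whose FAQ question shares the most words with the query."""
    query = tokenize(question)
    best_answer, best_score = None, 0.0
    for faq_question, answer in FAQS.items():
        candidate = tokenize(faq_question)
        # Jaccard similarity: shared words divided by all distinct words.
        score = len(query & candidate) / len(query | candidate)
        if score > best_score:
            best_answer, best_score = answer, score
    # None means no FAQ shared any word with the question.
    return best_answer


print(find_faq_answer("Can I update my billing address?"))
```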

Not only are you driving agent efficiency and agent experience, but you’re also developing the next level of customer experience as well.

Ending the Conversation With the Customer

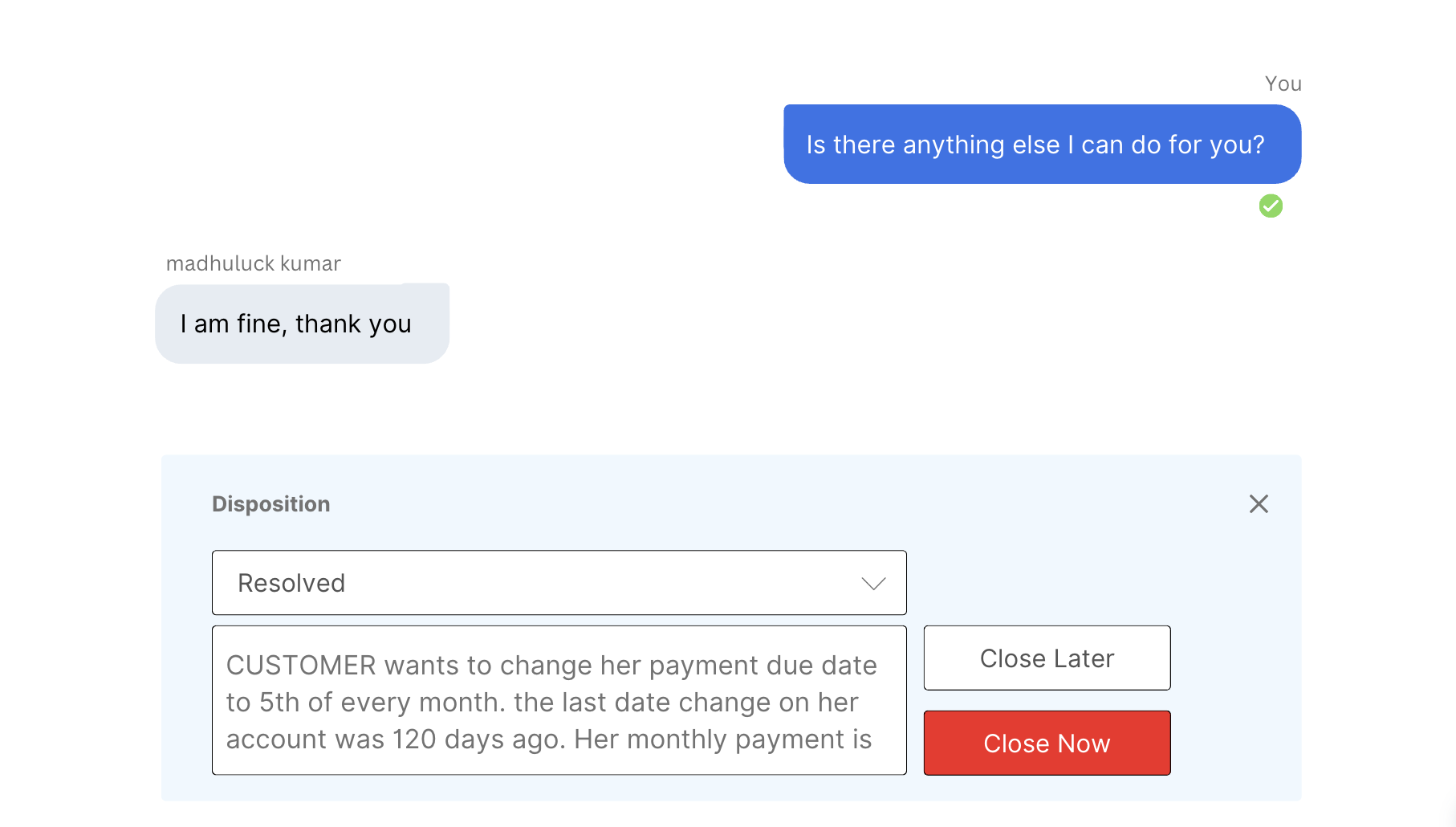

With the main requests fulfilled, we can ask whether there is anything else we can help the customer with. Here the customer doesn’t need anything else, so we end the conversation.

Automating the Call Summary Report for the CRM

The ‘wrap-up notes’ are summarized automatically so you can save them in a customer relationship management (CRM) system. Automating this step drives efficiency through the contact center and elevates both the agent and customer experience.
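The wrap-up step can be pictured as turning a transcript into a small record that is ready to save. In the sketch below, the `WrapUpNote` fields, the customer ID, and the sample transcript are all hypothetical, and `first_line_summary` is a stand-in for the LLM call that would produce the real summary.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class WrapUpNote:
    """Hypothetical CRM record for one finished conversation."""
    customer_id: str
    summary: str
    turns: int


def build_wrap_up_note(
    customer_id: str,
    transcript: List[str],
    summarize: Callable[[str], str],
) -> WrapUpNote:
    """Summarize the transcript and package it as a record ready to save."""
    summary = summarize("\n".join(transcript))
    return WrapUpNote(customer_id=customer_id, summary=summary, turns=len(transcript))


def first_line_summary(text: str) -> str:
    """Stand-in for an LLM call: returns only the first line of the transcript."""
    return text.splitlines()[0] if text else ""


note = build_wrap_up_note(
    "C-1001",
    ["Customer: I want to change my payment due date.", "Agent: Done."],
    first_line_summary,
)
print(note)
```

Passing the summarizer in as a function keeps the record-building logic independent of whichever model produces the summary.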

The Business Value of Large Language Models

LLMs are a valuable tool for businesses to automate their customer service process and provide a better customer experience while also driving efficiency in their contact centers. By providing empathetic responses, quick access to information, and streamlining the entire process, LLMs can help businesses keep up with the demands of their customers and stay ahead of the competition.

The Kore.ai XO Platform empowers businesses to build advanced virtual assistants that generate natural responses with minimal development effort. By harnessing the power of large language models (LLMs) and generative AI technologies, the platform assists in every step of intelligent virtual assistant (IVA) development, reducing operational effort and delivering results in less time.

Ready to achieve a faster time-to-market? Try Kore.ai today!